Quickly Building Your Own Chat UI on Top of Local and Closed-Source Large Language Models

Greetings! In this post I share some experience and thoughts from hands-on practice.

Background

An earlier article of mine covered how to quickly set up local large language models:

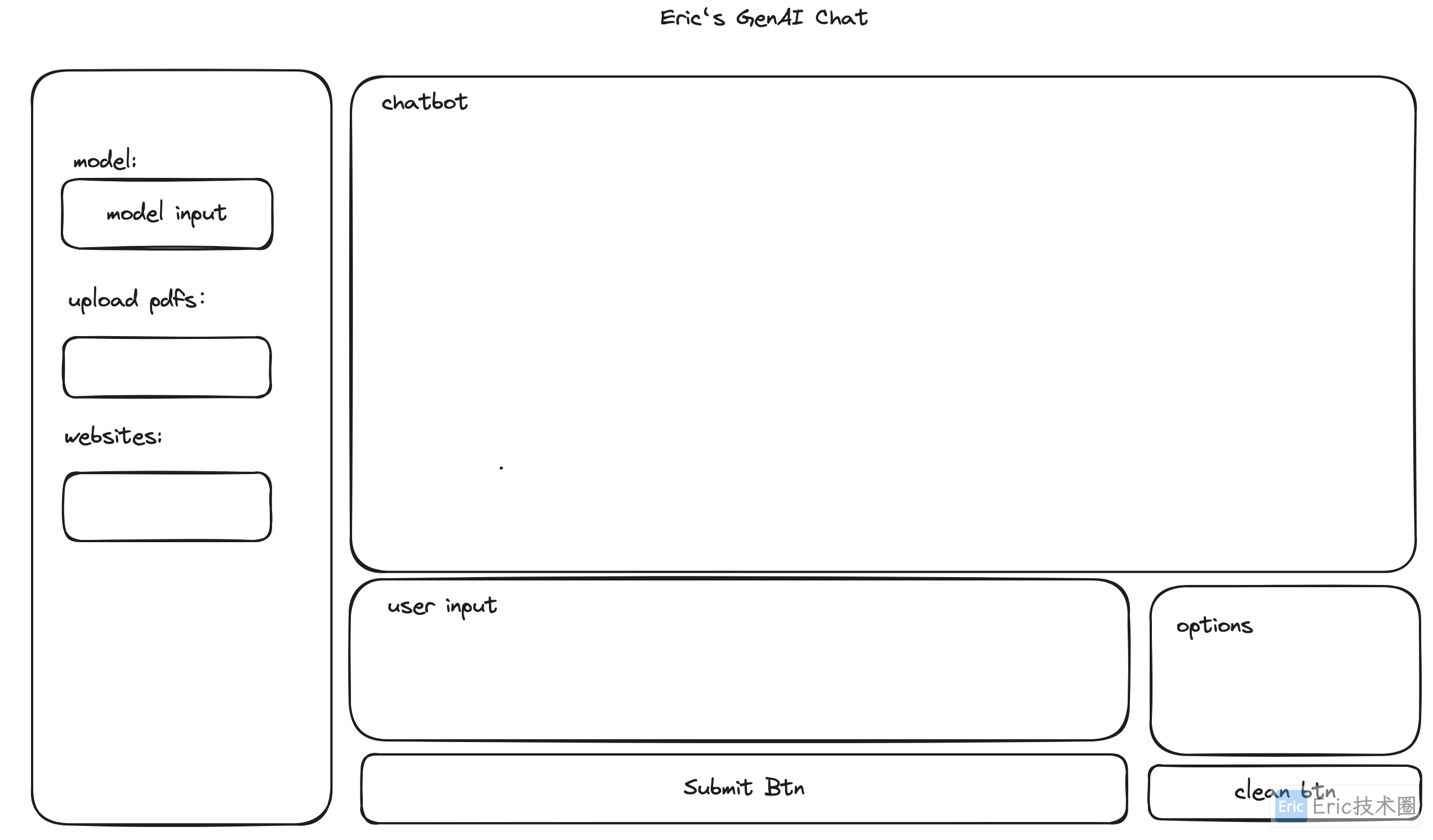

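I have set up many open-source models locally, and I also use some closed-source paid models such as ChatGPT and Claude 3. Each has to be called in a different way, which is inconvenient. So I decided to build a ChatBot UI that puts them all behind one interface and makes my later AIGC research easier. Below is my rough sketch of the idea: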

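The first stage implements switching among multiple models. It currently covers closed-source models such as the ChatGPT series, local large language models served by Ollama, and locally quantized models (ChatGLM3-6B); more models can be added later. Let's get to it!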
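The multi-model switching idea can be sketched as a small registry that groups model names by the backend that serves them. This is only an illustrative sketch: the model names come from this article, while `MODEL_FAMILIES`, `family_of`, and `all_models` are hypothetical names introduced here.

```python
from typing import Dict, List, Optional

# Model names are taken from the article; the family keys are illustrative labels.
MODEL_FAMILIES: Dict[str, List[str]] = {
    "openai": ["gpt-3.5-turbo", "gpt-4"],
    "chatglm": ["chatglm3-6b"],
    "ollama": ["qwen:7b", "llama2:7b", "mistral:7b"],
}


def family_of(model_name: str) -> Optional[str]:
    """Return the backend family that serves model_name, or None if it is unknown."""
    for family, names in MODEL_FAMILIES.items():
        if model_name in names:
            return family
    return None


def all_models() -> List[str]:
    """Flatten the registry into the list a model dropdown would display."""
    return [name for names in MODEL_FAMILIES.values() for name in names]


if __name__ == "__main__":
    print(family_of("qwen:7b"))
    print(all_models())
```

Adding a new backend then only means adding one entry to the registry; the UI can stay unchanged because it only ever sees the flattened list of names.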

Implementation

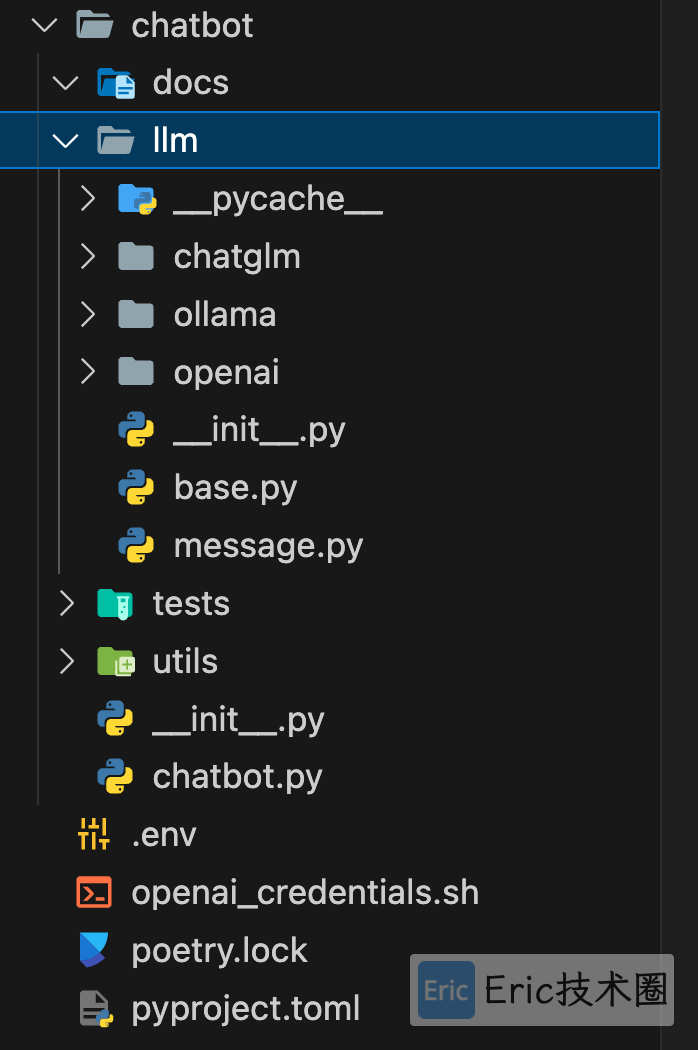

First, lay out the structure of the Python project, as shown in the figure:

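Once the code for calling the LLMs is written, Gradio makes it quick to put together the chatbot UI; even people who are not very familiar with Python can pick it up quickly. The source code is as follows: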

import os
from typing import Dict, Optional

import gradio as gr
from dotenv import load_dotenv, find_dotenv

from llm.base import LLM
from llm.message import Message
from llm.openai.chat import OpenAIChat
from llm.chatglm.chat import ChatGLMChat
from llm.ollama.chat import OllamaChat

# Read the local .env file.
_ = load_dotenv(find_dotenv())
api_key = os.getenv('OPENAI_API_KEY')
api_base = os.getenv('OPENAI_API_BASE')

# Cache of already-created clients, keyed by model name.
llm_clients: Dict[str, LLM] = {}

# Models offered in the dropdown, grouped by backend.
chatgpt_models = ["gpt-3.5-turbo", "gpt-4-vision-preview", "gpt-4"]
chatglm_models = ["chatglm3-6b"]
ollama_models = ["openhermes", "qwen:7b", "llama2:7b", "mistral:7b", "gemma:7b"]
models = chatgpt_models + chatglm_models + ollama_models

def switch_model(model_name):
    # The returned empty list resets the `messages` state when the model changes.
    print("switch to model: " + model_name)
    return []


def get_llm_by_name(model_name):
    """Return the client for model_name, creating and caching it on first use."""
    llm: Optional[LLM] = None
    if model_name in llm_clients:
        llm = llm_clients[model_name]
    else:
        if model_name in chatgpt_models:
            llm = OpenAIChat(model=model_name, api_key=api_key, base_url=api_base)
        elif model_name in chatglm_models:
            llm = ChatGLMChat()
        elif model_name in ollama_models:
            llm = OllamaChat(model=model_name)
        # Cache the new client so later requests reuse it.
        if model_name is not None and llm is not None:
            llm_clients[model_name] = llm
        print(llm_clients)
    return llm

def predict(query, model_name, chatbot, messages, max_length, top_p, temperature):
    """Stream the selected model's reply into the chat window."""
    llm = get_llm_by_name(model_name)
    if llm is None:
        raise Exception("LLM not set")

    # Ignore empty submissions instead of appending None to the history.
    if not query:
        yield chatbot, messages
        return

    chatbot.append((query, ""))
    messages.append(Message(role="user", content=query))

    request_kwargs = dict(
        max_tokens=max_length,
        top_p=top_p,
        temperature=temperature,
    )

    # Accumulate the streamed chunks and refresh the last chat row after each one.
    response = ""
    for chunk in llm.response_stream(messages, **request_kwargs):
        response += chunk
        chatbot[-1] = (chatbot[-1][0], response)
        yield chatbot, messages

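def reset_user_input():
    # Clear the input textbox after a message is submitted.
    return gr.update(value="")


def reset_state():
    # Empty both the visible chat window and the stored message history.
    return [], []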

with gr.Blocks() as demo:
    gr.HTML("""<h1 align="center">Eric's ChatBot</h1>""")

    # Top row: model picker on the left, chat window on the right.
    with gr.Row():
        with gr.Column(scale=1):
            model_selection = gr.Dropdown(models, label="select models:", value="gpt-3.5-turbo")
        with gr.Column(scale=4):
            chatbot = gr.Chatbot()

    # Bottom row: input box and submit button, next to the sampling controls.
    with gr.Row():
        with gr.Column(scale=4):
            user_input = gr.Textbox(show_label=False, placeholder="Input...", lines=8)
            submitBtn = gr.Button("Submit", variant="primary")
        with gr.Column(scale=1):
            max_length = gr.Slider(0, 4096, value=2048, step=1.0, label="Maximum Length", interactive=True)
            top_p = gr.Slider(0, 1, value=0.7, step=0.01, label="Top P", interactive=True)
            temperature = gr.Slider(0, 1, value=0.7, step=0.01, label="Temperature", interactive=True)
            emptyBtn = gr.Button("Clear History")

    # Per-session message history passed to the model.
    messages = gr.State([])

    model_selection.change(switch_model, inputs=model_selection, outputs=[messages])
    submitBtn.click(
        predict,
        [user_input, model_selection, chatbot, messages, max_length, top_p, temperature],
        [chatbot, messages],
        show_progress=True,
    )
    submitBtn.click(reset_user_input, [], [user_input])
    emptyBtn.click(reset_state, outputs=[chatbot, messages], show_progress=True)

demo.queue().launch(share=False, inbrowser=True)
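The listing imports `LLM` and `Message` from the project's own `llm` package without showing them. Judging only from how they are used above (`Message(role=..., content=...)` and `llm.response_stream(messages, **kwargs)` yielding text chunks), a minimal sketch of that interface might look like the following. Everything in it is an assumption rather than the actual project code, and `EchoLLM` is a hypothetical stand-in backend that is handy for trying out the UI without any model running.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Any, Iterator, List


@dataclass
class Message:
    """One chat turn; the field names mirror the keyword arguments used in the listing."""
    role: str
    content: str


class LLM(ABC):
    """Assumed common interface that every backend wrapper implements."""

    @abstractmethod
    def response_stream(self, messages: List[Message], **kwargs: Any) -> Iterator[str]:
        """Yield the reply incrementally as text chunks."""


class EchoLLM(LLM):
    """Hypothetical stand-in backend: streams the last user message back word by word."""

    def response_stream(self, messages: List[Message], **kwargs: Any) -> Iterator[str]:
        # Find the most recent user turn; fall back to an empty reply if there is none.
        last_user = next((m.content for m in reversed(messages) if m.role == "user"), "")
        for word in last_user.split():
            yield word + " "


if __name__ == "__main__":
    history = [Message(role="user", content="hello local models")]
    print("".join(EchoLLM().response_stream(history, temperature=0.7)))
```

With a shared interface like this, the UI code only depends on `response_stream`, so each backend (OpenAI, ChatGLM, Ollama) can translate the common keyword arguments into whatever its own API expects.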

Results


License:

CC BY 4.0